Vertex AI

Important Capabilities

| Capability | Status | Notes |
|---|---|---|
| Descriptions | ✅ | Extract descriptions for Vertex AI Registered Models and Model Versions |

Ingesting metadata from Vertex AI requires the Vertex AI module.

Prerequisites

Please refer to the Vertex AI documentation for basic information on Vertex AI.

Credentials to access GCP

To understand how to set up Application Default Credentials for GCP, please read the relevant section of the GCP docs.

Create a service account and assign roles

Set up a service account as described in the GCP docs and assign the previously created role to this service account.

Download a service account JSON keyfile.

- Example credential file:

{
  "type": "service_account",
  "project_id": "project-id-1234567",
  "private_key_id": "d0121d0000882411234e11166c6aaa23ed5d74e0",
  "private_key": "-----BEGIN PRIVATE KEY-----\nMIIyourkey\n-----END PRIVATE KEY-----",
  "client_email": "test@suppproject-id-1234567.iam.gserviceaccount.com",
  "client_id": "113545814931671546333",
  "auth_uri": "https://accounts.google.com/o/oauth2/auth",
  "token_uri": "https://oauth2.googleapis.com/token",
  "auth_provider_x509_cert_url": "https://www.googleapis.com/oauth2/v1/certs",
  "client_x509_cert_url": "https://www.googleapis.com/robot/v1/metadata/x509/test%40suppproject-id-1234567.iam.gserviceaccount.com"
}

To provide credentials to the source, you can either:

Set an environment variable:

$ export GOOGLE_APPLICATION_CREDENTIALS="/path/to/keyfile.json"

or

Set the credential config in your source based on the credential JSON file. For example:

credential:
  private_key_id: "d0121d0000882411234e11166c6aaa23ed5d74e0"
  private_key: "-----BEGIN PRIVATE KEY-----\nMIIyourkey\n-----END PRIVATE KEY-----\n"
  client_email: "test@suppproject-id-1234567.iam.gserviceaccount.com"
  client_id: "123456678890"
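The credential block reuses four fields from the service account keyfile. As a minimal sketch, the helper below extracts those fields from keyfile JSON and fails early if any is missing. The function name `credential_from_keyfile` and the constant `REQUIRED_KEYS` are hypothetical and not part of DataHub; the values are the placeholders used in this document.

```python
import json

# Fields the `credential` block marks as required in this document.
REQUIRED_KEYS = ("private_key_id", "private_key", "client_email", "client_id")


def credential_from_keyfile(keyfile_text: str) -> dict:
    """Build a recipe `credential` mapping from service account keyfile JSON."""
    key = json.loads(keyfile_text)
    missing = [name for name in REQUIRED_KEYS if not key.get(name)]
    if missing:
        raise ValueError(f"keyfile is missing required fields: {missing}")
    return {name: key[name] for name in REQUIRED_KEYS}


# Placeholder values only; a real keyfile would be read from disk.
example_keyfile = json.dumps(
    {
        "type": "service_account",
        "project_id": "project-id-1234567",
        "private_key_id": "d0121d0000882411234e11166c6aaa23ed5d74e0",
        "private_key": "-----BEGIN PRIVATE KEY-----\nMIIyourkey\n-----END PRIVATE KEY-----\n",
        "client_email": "test@suppproject-id-1234567.iam.gserviceaccount.com",
        "client_id": "113545814931671546333",
    }
)

credential = credential_from_keyfile(example_keyfile)
```

The resulting mapping can be placed under `credential` in the source config. Prefer the environment variable when possible so that the private key does not have to be copied into the recipe.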

Integration Details

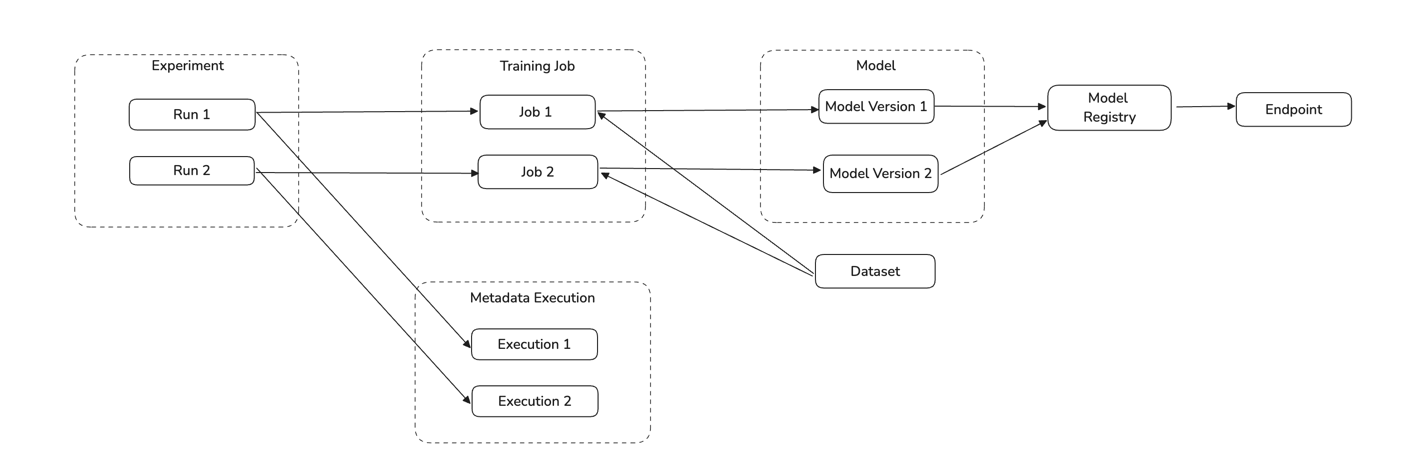

The ingestion job extracts Models, Datasets, Training Jobs, Endpoints, Experiments, and Experiment Runs in a given project and region on Vertex AI.

Concept Mapping

This ingestion source maps the following Vertex AI Concepts to DataHub Concepts:

| Source Concept | DataHub Concept | Notes |
|---|---|---|
| Model | MlModelGroup | The name of a Model Group is the same as the Model's name. Models serve as containers for multiple versions of the same model in Vertex AI. |
| Model Version | MlModel | The name of a Model is {model_name}_{model_version} (e.g. my_vertexai_model_1) for a model registered to the Model Registry or deployed to an Endpoint. Each Model Version represents a specific iteration of a model with its own metadata. |
| Dataset | Dataset | A Managed Dataset resource in Vertex AI is mapped to a Dataset in DataHub. Supported dataset types include Text, Tabular, Image, Video, and TimeSeries. |
| Training Job | DataProcessInstance | A Training Job is mapped to a DataProcessInstance in DataHub. Supported training job types include AutoMLTextTrainingJob, AutoMLTabularTrainingJob, AutoMLImageTrainingJob, AutoMLVideoTrainingJob, AutoMLForecastingTrainingJob, Custom Job, Custom TrainingJob, Custom Container TrainingJob, and Custom Python Packaging Job. |
| Experiment | Container | Experiments organize related runs and serve as logical groupings for model development iterations. Each Experiment is mapped to a Container in DataHub. |
| Experiment Run | DataProcessInstance | An Experiment Run represents a single execution of an ML workflow. An Experiment Run tracks ML parameters, metrics, artifacts, and metadata. |
| Execution | DataProcessInstance | A Metadata Execution resource in Vertex AI. A Metadata Execution is started in an experiment run and captures input and output artifacts. |
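The mapping and the naming rule from the table can be sketched in a few lines. This is an illustration of the convention described above, not the connector's actual code; `VERTEX_TO_DATAHUB` and `model_version_name` are hypothetical names.

```python
# Concept mapping as listed in the table above.
VERTEX_TO_DATAHUB = {
    "Model": "MlModelGroup",
    "Model Version": "MlModel",
    "Dataset": "Dataset",
    "Training Job": "DataProcessInstance",
    "Experiment": "Container",
    "Experiment Run": "DataProcessInstance",
    "Execution": "DataProcessInstance",
}


def model_version_name(model_name: str, model_version: str) -> str:
    """Apply the {model_name}_{model_version} naming rule for an MlModel."""
    return f"{model_name}_{model_version}"


# Version 1 of a model named "my_vertexai_model".
name = model_version_name("my_vertexai_model", "1")
```

Here `name` is `my_vertexai_model_1`, matching the example in the table, while the Model Group keeps the plain model name.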

Vertex AI Concept Diagram:

Lineage

Lineage is emitted using the Vertex AI API to capture the following relationships:

- A training job and a model (which training job produced a model)

- A dataset and a training job (which dataset was consumed by a training job to train a model)

- Experiment runs and an experiment

- Metadata execution and an experiment run
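To make the relationships above concrete, the sketch below models them as a small graph and walks upstream from a model to the training job and dataset behind it. All resource names are hypothetical placeholders, and the structure is only an illustration; it does not reflect how DataHub stores lineage internally.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

# (upstream, downstream) pairs; every name here is a made-up placeholder.
EDGES: List[Tuple[str, str]] = [
    ("dataset/churn_table", "training_job/churn_automl"),      # dataset consumed by a training job
    ("training_job/churn_automl", "model/churn_classifier"),   # training job produced a model
]

# Grouping relationships: child resource -> the resource it belongs to.
BELONGS_TO: Dict[str, str] = {
    "experiment_run/run-1": "experiment/churn-exp",   # experiment run within an experiment
    "execution/exec-1": "experiment_run/run-1",       # metadata execution within an experiment run
}


def upstream_of(node: str, edges: List[Tuple[str, str]]) -> Set[str]:
    """Return every resource reachable by following edges upstream from node."""
    parents: Dict[str, Set[str]] = defaultdict(set)
    for up, down in edges:
        parents[down].add(up)
    seen: Set[str] = set()
    stack = [node]
    while stack:
        current = stack.pop()
        for up in parents[current]:
            if up not in seen:
                seen.add(up)
                stack.append(up)
    return seen


model_upstreams = upstream_of("model/churn_classifier", EDGES)
```

For the placeholder data, `model_upstreams` contains both the training job and the dataset, which is the kind of question the emitted lineage is meant to answer in DataHub.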

CLI based Ingestion

Starter Recipe

Check out the following recipe to get started with ingestion! See below for full configuration options.

For general pointers on writing and running a recipe, see our main recipe guide.

source:
  type: vertexai
  config:
    project_id: "acryl-poc"
    region: "us-west2"
    # You must either set GOOGLE_APPLICATION_CREDENTIALS or provide credential as shown below
    # credential:
    #   private_key: '-----BEGIN PRIVATE KEY-----\\nprivate-key\\n-----END PRIVATE KEY-----\\n'
    #   private_key_id: "project_key_id"
    #   client_email: "client_email"
    #   client_id: "client_id"

sink:
  type: "datahub-rest"
  config:
    server: "http://localhost:8080"
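The same recipe can also be expressed as a Python dictionary and run programmatically. The sketch below assumes the DataHub Python package with its Vertex AI source is installed and that GCP credentials are available; `build_recipe` and `run_recipe` are hypothetical helper names, and the DataHub import is deferred so that the recipe can be built and inspected without DataHub present.

```python
def build_recipe(project_id: str, region: str, server: str = "http://localhost:8080") -> dict:
    """Return the starter recipe as a dictionary."""
    return {
        "source": {
            "type": "vertexai",
            "config": {"project_id": project_id, "region": region},
        },
        "sink": {
            "type": "datahub-rest",
            "config": {"server": server},
        },
    }


def run_recipe(recipe: dict) -> None:
    """Run the recipe through DataHub's ingestion pipeline."""
    # Imported lazily: this line needs the DataHub package to be installed.
    from datahub.ingestion.run.pipeline import Pipeline

    pipeline = Pipeline.create(recipe)
    pipeline.run()
    pipeline.raise_from_status()


recipe = build_recipe("acryl-poc", "us-west2")
# run_recipe(recipe)  # uncomment once DataHub and GCP credentials are set up
```

Building the dictionary separately from running it makes it easy to log or review the exact configuration before any call to GCP is made.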

Config Details

Note that a . is used to denote nested fields in the YAML recipe.

| Field | Description |
|---|---|
| project_id ✅ string | Project ID in Google Cloud Platform |
| region ✅ string | Region of your project in Google Cloud Platform |
| bucket_uri string | Bucket URI used in your project |
| vertexai_url string | Vertex AI UI URI. Default: https://console.cloud.google.com/vertex-ai |
| env string | The environment that all assets produced by this connector belong to. Default: PROD |
| credential GCPCredential | GCP credential information |
| credential.client_email ❓ string | Client email |
| credential.client_id ❓ string | Client ID |
| credential.private_key ❓ string | Private key in the form '-----BEGIN PRIVATE KEY-----\nprivate-key\n-----END PRIVATE KEY-----\n' |
| credential.private_key_id ❓ string | Private key ID |
| credential.auth_provider_x509_cert_url string | Auth provider x509 certificate URL. Default: https://www.googleapis.com/oauth2/v1/certs |
| credential.auth_uri string | Authentication URI. Default: https://accounts.google.com/o/oauth2/auth |
| credential.client_x509_cert_url string | If not set, defaults to https://www.googleapis.com/robot/v1/metadata/x509/client_email |
| credential.project_id string | Project ID to set the credentials |
| credential.token_uri string | Token URI. Default: https://oauth2.googleapis.com/token |
| credential.type string | Authentication type. Default: service_account |
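The dotted field names in the table correspond to nested keys in the recipe. As a small illustration, the hypothetical helper `flatten` below converts a nested config into the dotted form used in the Field column.

```python
def flatten(config: dict, prefix: str = "") -> dict:
    """Flatten nested config keys into dotted names, e.g. credential.client_id."""
    flat = {}
    for key, value in config.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, path))
        else:
            flat[path] = value
    return flat


# Placeholder config values for illustration.
nested = {
    "project_id": "acryl-poc",
    "region": "us-west2",
    "credential": {"client_email": "client_email", "client_id": "client_id"},
}
dotted = flatten(nested)
```

In this example the nested `client_id` key under `credential` appears as `credential.client_id`, the same name the table uses.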

The JSONSchema for this configuration is inlined below.

{
  "title": "VertexAIConfig",
  "description": "Any source that produces dataset urns in a single environment should inherit this class",
  "type": "object",
  "properties": {
    "env": {
      "title": "Env",
      "description": "The environment that all assets produced by this connector belong to",
      "default": "PROD",
      "type": "string"
    },
    "credential": {
      "title": "Credential",
      "description": "GCP credential information",
      "allOf": [
        {
          "$ref": "#/definitions/GCPCredential"
        }
      ]
    },
    "project_id": {
      "title": "Project Id",
      "description": "Project ID in Google Cloud Platform",
      "type": "string"
    },
    "region": {
      "title": "Region",
      "description": "Region of your project in Google Cloud Platform",
      "type": "string"
    },
    "bucket_uri": {
      "title": "Bucket Uri",
      "description": "Bucket URI used in your project",
      "type": "string"
    },
    "vertexai_url": {
      "title": "Vertexai Url",
      "description": "VertexUI URI",
      "default": "https://console.cloud.google.com/vertex-ai",
      "type": "string"
    }
  },
  "required": [
    "project_id",
    "region"
  ],
  "additionalProperties": false,
  "definitions": {
    "GCPCredential": {
      "title": "GCPCredential",
      "type": "object",
      "properties": {
        "project_id": {
          "title": "Project Id",
          "description": "Project id to set the credentials",
          "type": "string"
        },
        "private_key_id": {
          "title": "Private Key Id",
          "description": "Private key id",
          "type": "string"
        },
        "private_key": {
          "title": "Private Key",
          "description": "Private key in a form of '-----BEGIN PRIVATE KEY-----\\nprivate-key\\n-----END PRIVATE KEY-----\\n'",
          "type": "string"
        },
        "client_email": {
          "title": "Client Email",
          "description": "Client email",
          "type": "string"
        },
        "client_id": {
          "title": "Client Id",
          "description": "Client Id",
          "type": "string"
        },
        "auth_uri": {
          "title": "Auth Uri",
          "description": "Authentication uri",
          "default": "https://accounts.google.com/o/oauth2/auth",
          "type": "string"
        },
        "token_uri": {
          "title": "Token Uri",
          "description": "Token uri",
          "default": "https://oauth2.googleapis.com/token",
          "type": "string"
        },
        "auth_provider_x509_cert_url": {
          "title": "Auth Provider X509 Cert Url",
          "description": "Auth provider x509 certificate url",
          "default": "https://www.googleapis.com/oauth2/v1/certs",
          "type": "string"
        },
        "type": {
          "title": "Type",
          "description": "Authentication type",
          "default": "service_account",
          "type": "string"
        },
        "client_x509_cert_url": {
          "title": "Client X509 Cert Url",
          "description": "If not set it will be default to https://www.googleapis.com/robot/v1/metadata/x509/client_email",
          "type": "string"
        }
      },
      "required": [
        "private_key_id",
        "private_key",
        "client_email",
        "client_id"
      ],
      "additionalProperties": false
    }
  }
}
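The schema's `required` lists and `additionalProperties: false` setting translate into two simple checks: required fields must be present, and unknown top-level fields are rejected. The sketch below hand-codes those checks for illustration only. `check_config` is a hypothetical helper, not DataHub's validation, and it does not check value types or unknown fields inside `credential`.

```python
from typing import List

# Field names copied from the schema above.
TOP_LEVEL_FIELDS = {"env", "credential", "project_id", "region", "bucket_uri", "vertexai_url"}
REQUIRED_FIELDS = {"project_id", "region"}
CREDENTIAL_REQUIRED = {"private_key_id", "private_key", "client_email", "client_id"}


def check_config(config: dict) -> List[str]:
    """Return a list of problems found in a source config; empty means none found."""
    problems = []
    for name in sorted(REQUIRED_FIELDS - set(config)):
        problems.append(f"missing required field: {name}")
    for name in sorted(set(config) - TOP_LEVEL_FIELDS):
        problems.append(f"unknown field: {name}")
    credential = config.get("credential")
    if credential is not None:
        for name in sorted(CREDENTIAL_REQUIRED - set(credential)):
            problems.append(f"missing required field: credential.{name}")
    return problems


ok = check_config({"project_id": "acryl-poc", "region": "us-west2"})
bad = check_config({"project_id": "acryl-poc", "regoin": "us-west2", "credential": {"client_id": "1"}})
```

The second call shows a typical mistake: the misspelled `regoin` key is reported both as an unknown field and, indirectly, as a missing `region`.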

Code Coordinates

- Class Name: datahub.ingestion.source.vertexai.vertexai.VertexAISource

Questions

If you've got any questions on configuring ingestion for Vertex AI, feel free to ping us on our Slack.